Collecting Kubernetes Logs

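Two properties of containers make log collection harder:

• K8s elastic scaling: the collection targets cannot be determined in advance

• Container isolation: a container's filesystem is isolated from the host, so a log collector cannot simply read the log files

Applications emit logs in one of two ways:

• Standard output: written to the console and visible with kubectl logs

• Log files: written to files inside the container's filesystem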

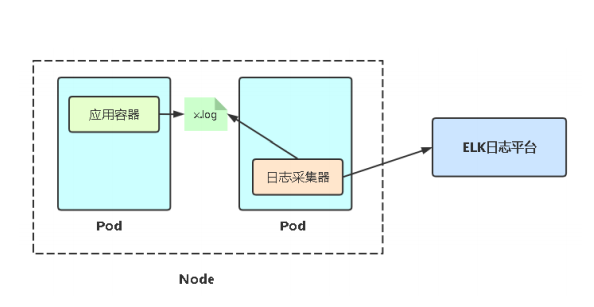

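For standard output: deploy a log collector on every node as a DaemonSet, and have it collect all container logs under /var/lib/docker/containers/.

Local log files of Docker containers: /var/lib/docker/containers// -json.log

By mounting the host directory /var/lib/docker/containers (which holds the *-json.log files) into the log collector pod, the collector can read the logs of every container on its node.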
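To see what the collector actually reads, here is a minimal Python sketch that parses one line of a *-json.log file. It assumes Docker's default json-file logging driver, which writes each line of container output as a JSON object with log, stream, and time keys; the DockerLogEntry class and parse_json_log_line function are hypothetical names for illustration, not part of filebeat.

```python
import json
from dataclasses import dataclass


@dataclass
class DockerLogEntry:
    stream: str   # "stdout" or "stderr"
    time: str     # timestamp recorded by Docker
    message: str  # the application's output line, trailing newline removed


def parse_json_log_line(line: str) -> DockerLogEntry:
    """Parse one line of a Docker json-file log (a *-json.log file)."""
    record = json.loads(line)
    return DockerLogEntry(
        stream=record["stream"],
        time=record["time"],
        message=record["log"].rstrip("\n"),
    )


if __name__ == "__main__":
    # Hypothetical sample line; real timestamps come from Docker.
    sample = '{"log":"GET / HTTP/1.1 200\\n","stream":"stdout","time":"2020-10-01T08:00:00.000000000Z"}'
    entry = parse_json_log_line(sample)
    print(entry.stream, entry.message)  # stdout GET / HTTP/1.1 200
```

The stream key is what lets a collector tell stdout from stderr even though both end up in the same file.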

How kubectl logs retrieves standard output:

kubectl logs -> apiserver -> kubelet -> docker -> *-json.log

kubectl logs sends a request to the apiserver, the apiserver calls the kubelet, the kubelet calls Docker, and Docker reads the *-json.log file.
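The first hop in that chain is an ordinary REST call: kubectl logs reads the pod's log subresource from the apiserver. The sketch below only builds that request path; the helper name pod_log_path is hypothetical, while the container, follow, and tailLines query parameters correspond to kubectl logs -c, -f, and --tail.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def pod_log_path(namespace: str, pod: str, container: Optional[str] = None,
                 follow: bool = False, tail_lines: Optional[int] = None) -> str:
    """Build the apiserver path for a pod's log subresource, as kubectl logs requests it."""
    path = f"/api/v1/namespaces/{quote(namespace)}/pods/{quote(pod)}/log"
    params = {}
    if container is not None:
        params["container"] = container        # kubectl logs -c
    if follow:
        params["follow"] = "true"              # kubectl logs -f
    if tail_lines is not None:
        params["tailLines"] = str(tail_lines)  # kubectl logs --tail
    return f"{path}?{urlencode(params)}" if params else path


if __name__ == "__main__":
    print(pod_log_path("default", "web-5dcb957ccc-7w784", follow=True))
    # /api/v1/namespaces/default/pods/web-5dcb957ccc-7w784/log?follow=true
```

For example, kubectl get --raw "/api/v1/namespaces/default/pods/web-5dcb957ccc-7w784/log" should return the same text as kubectl logs web-5dcb957ccc-7w784 for the nginx pod created below.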

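Deploy an nginx service on k8s:

```bash
# Check the node taints
kubectl describe node | grep Taint
# Remove the master taint so pods can be scheduled on the master node
kubectl taint node k8s-master node-role.kubernetes.io/master-
kubectl describe node | grep Taint
# Create an nginx Deployment named "web"
kubectl create deployment web --image=nginx
kubectl get pod -n kube-system
kubectl describe pod web-5dcb957ccc-7w784
# Find the pod IP, then send it a request
kubectl get pods -o wide
curl 10.244.0.4
# Follow the pod's standard output
kubectl logs web-5dcb957ccc-7w784 -f
# The same container as seen by Docker on the node
docker ps | grep web
```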

Deploy the filebeat collector on k8s:

```bash
vim filebeat-kubernetes.yaml
```

Change the Logstash address that filebeat pushes to (output.logstash.hosts):

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        path: ${path.config}/inputs.d/*.yml
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        reload.enabled: false

    output.logstash:
      hosts: ["192.168.0.11:5044"]
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-inputs
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  kubernetes.yml: |-
    - type: docker
      containers.ids:
      - "*"
      processors:
        - add_kubernetes_metadata:
            in_cluster: true
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: elastic/filebeat:7.9.2
        args: ["-c", "/etc/filebeat.yml", "-e"]
        env:
        - name: ELASTICSEARCH_HOST
          value: elasticsearch
        - name: ELASTICSEARCH_PORT
          value: "9200"
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        # Host directory holding the containers' *-json.log files
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: inputs
        configMap:
          defaultMode: 0600
          name: filebeat-inputs
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 works since 1.8
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""]
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
---
```

Apply the manifest and check the pods:

```bash
kubectl apply -f filebeat-kubernetes.yaml
kubectl get pod -n kube-system
```

Access the nginx service:

```bash
kubectl get pod -o wide
curl 10.244.0.4
```

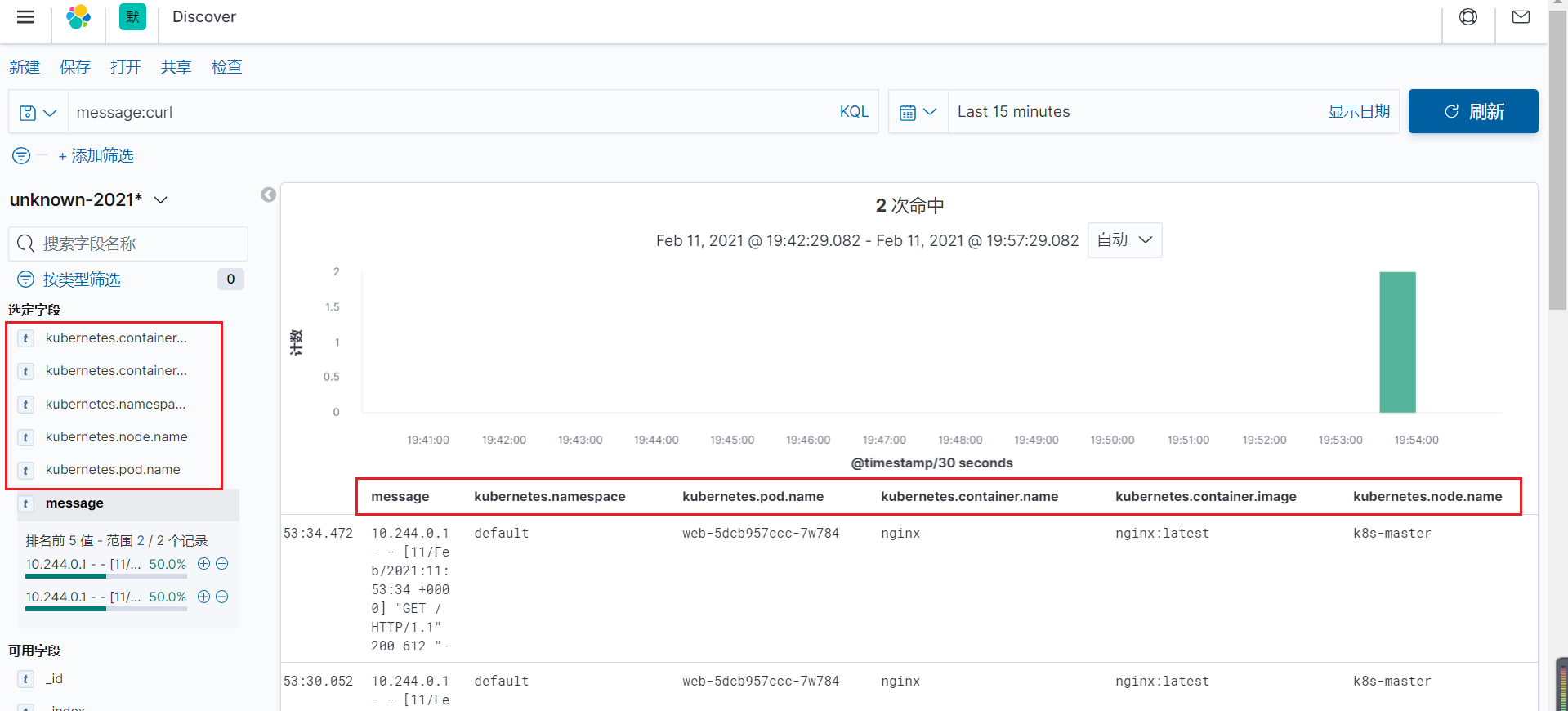

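View the logs in Kibana.

As the Kibana view shows, filebeat collected the k8s container logs together with their source: the container, pod, namespace, and node each entry came from, and so on. This works because filebeat has built-in Kubernetes support, here the add_kubernetes_metadata processor configured in the filebeat-inputs ConfigMap.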

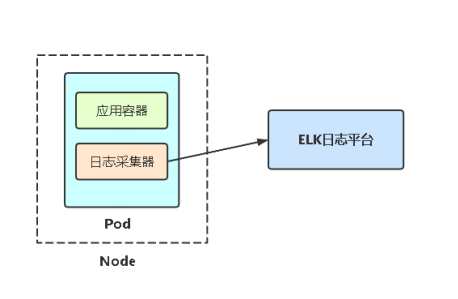
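Conceptually, this enrichment is a lookup: each event carries a container ID, and the processor maps that ID to pod metadata it learns by watching the apiserver, which is why the ClusterRole above grants get, watch, and list on pods and namespaces. The following is a simplified Python model of that idea, not filebeat's implementation; the index contents are hypothetical, and the output keys are modeled on the kubernetes.* fields (kubernetes.namespace, kubernetes.pod.name, kubernetes.node.name, kubernetes.container.name) that add_kubernetes_metadata adds.

```python
from typing import Dict

# Hypothetical index: container ID -> pod metadata. Filebeat learns this kind of
# mapping by watching pods through the apiserver.
PodIndex = Dict[str, Dict[str, str]]


def enrich(event: dict, index: PodIndex) -> dict:
    """Return a copy of event with kubernetes.* metadata when its container ID is known."""
    container_id = event.get("container", {}).get("id", "")
    meta = index.get(container_id)
    enriched = dict(event)
    if meta is None:
        return enriched  # unknown container: no metadata to add
    enriched["kubernetes"] = {
        "namespace": meta["namespace"],
        "pod": {"name": meta["pod"]},
        "node": {"name": meta["node"]},
        "container": {"name": meta["container"]},
    }
    return enriched


if __name__ == "__main__":
    index: PodIndex = {
        "abc123": {"namespace": "default", "pod": "web-5dcb957ccc-7w784",
                   "node": "k8s-master", "container": "nginx"},
    }
    event = {"message": "GET / HTTP/1.1 200", "container": {"id": "abc123"}}
    print(enrich(event, index)["kubernetes"]["pod"]["name"])  # web-5dcb957ccc-7w784
```

Because the index is kept current by watching the apiserver, newly scheduled pods are picked up without reconfiguring the collector, which addresses the "targets cannot be determined in advance" problem from the start of this article.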

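For log files inside containers: add a container that runs a log collector to the Pod, and use an emptyDir volume to share the log directory so the collector can read the log files.

The application container's logs are collected through a data volume shared by the containers in the same pod.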

Deploy the nginx and filebeat containers on k8s:

```bash
vim nginx-deployment.yaml
```

Change the Logstash address that filebeat pushes to (output.logstash.hosts):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-log-demo
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      project: microservice
      app: gateway
  template:
    metadata:
      labels:
        project: microservice
        app: gateway
    spec:
      containers:
      # Application container: writes its logs to /usr/local/nginx/logs
      - name: nginx
        image: lizhenliang/nginx-php
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
        resources:
          requests:
            cpu: 0.5
            memory: 256Mi
          limits:
            cpu: 1
            memory: 1Gi
        livenessProbe:
          httpGet:
            path: /status.html
            port: 80
          initialDelaySeconds: 20
          timeoutSeconds: 20
        readinessProbe:
          httpGet:
            path: /status.html
            port: 80
          initialDelaySeconds: 20
          timeoutSeconds: 20
        volumeMounts:
        - name: nginx-logs
          mountPath: /usr/local/nginx/logs
      # Log collector container: reads the same directory via the shared volume
      - name: filebeat
        image: elastic/filebeat:7.9.2
        args: ["-c", "/etc/filebeat.yml", "-e"]
        resources:
          limits:
            memory: 500Mi
          requests:
            cpu: 100m
            memory: 100Mi
        securityContext:
          runAsUser: 0
        volumeMounts:
        - name: filebeat-config
          mountPath: /etc/filebeat.yml
          subPath: filebeat.yml
        - name: nginx-logs
          mountPath: /usr/local/nginx/logs
      volumes:
      - name: nginx-logs
        emptyDir: {}
      - name: filebeat-config
        configMap:
          name: filebeat-nginx-config
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-nginx-config
  namespace: default
data:
  filebeat.yml: |-
    filebeat.inputs:
      - type: log
        paths:
          - /usr/local/nginx/logs/access.log
        fields_under_root: true
        fields:
          project: microservice
          app: gateway

    output.logstash:
      hosts: ["192.168.0.11:5044"]
```
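In the ConfigMap above, fields_under_root: true controls where the custom project and app fields land in each event. By default Filebeat groups custom fields under a fields sub-dictionary; with fields_under_root enabled they become top-level fields and overwrite any same-named fields. The Python function below is a hypothetical, shallow model of that behavior, not Filebeat code.

```python
def apply_custom_fields(event: dict, fields: dict, fields_under_root: bool) -> dict:
    """Simplified model of Filebeat's fields / fields_under_root input options."""
    result = dict(event)
    if fields_under_root:
        # Custom fields become top-level keys, overwriting same-named keys.
        result.update(fields)
    else:
        # Default: custom fields are grouped under a "fields" sub-dictionary.
        result["fields"] = dict(fields)
    return result


if __name__ == "__main__":
    event = {"message": "GET /status.html 200"}
    custom = {"project": "microservice", "app": "gateway"}
    print(apply_custom_fields(event, custom, fields_under_root=True))
    # {'message': 'GET /status.html 200', 'project': 'microservice', 'app': 'gateway'}
    print(apply_custom_fields(event, custom, fields_under_root=False))
    # {'message': 'GET /status.html 200', 'fields': {'project': 'microservice', 'app': 'gateway'}}
```

With the settings above, a Logstash pipeline or Kibana query could then filter on project and app directly rather than on fields.project and fields.app.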

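Explanation:

The filebeat configuration file is stored in a ConfigMap. When the pod is created, the ConfigMap is mounted into the log collector container, and filebeat collects logs according to that configuration file.

The emptyDir volume is a directory on the node that exists for the lifetime of the pod. It is mounted at the application container's log directory and at the same path in the log collector container, so the collector reads the log files locally, just as if it were collecting logs on the host.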
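What the sidecar does with the shared directory can be sketched as offset-based tailing: the application appends to access.log, and the collector remembers how many bytes it has consumed and reads only what was appended since. This Python sketch assumes a temporary directory stands in for the emptyDir volume, and read_new_lines is a hypothetical helper; Filebeat itself tracks per-file read offsets in its registry, which the DaemonSet manifest above persists through the data hostPath volume.

```python
import os
import tempfile
from typing import List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return the complete lines appended to path since offset, and the new offset."""
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Consume only up to the last newline so a half-written line is read next time.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8").splitlines()
    return lines, offset + end


if __name__ == "__main__":
    shared_dir = tempfile.mkdtemp()  # stands in for the emptyDir volume
    log_path = os.path.join(shared_dir, "access.log")

    # "nginx container": appends to the shared log file
    with open(log_path, "a", encoding="utf-8") as f:
        f.write("GET /status.html 200\n")
        f.write("GET /index.php 200\n")
        f.write("GET /partial")  # this line is not finished yet

    # "filebeat container": reads the same file through the shared volume
    lines, offset = read_new_lines(log_path, 0)
    print(lines)  # ['GET /status.html 200', 'GET /index.php 200']

    with open(log_path, "a", encoding="utf-8") as f:
        f.write(" 404\n")
    lines, offset = read_new_lines(log_path, offset)
    print(lines)  # ['GET /partial 404']
```

Holding back the unfinished last line keeps the reader from emitting a log entry that the writer has not finished yet.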

Apply the manifest and check the pods:

```bash
kubectl apply -f nginx-deployment.yaml
kubectl get pods
```

Access the nginx service:

```bash
kubectl get pod -o wide
curl 10.244.0.8/status.html
```

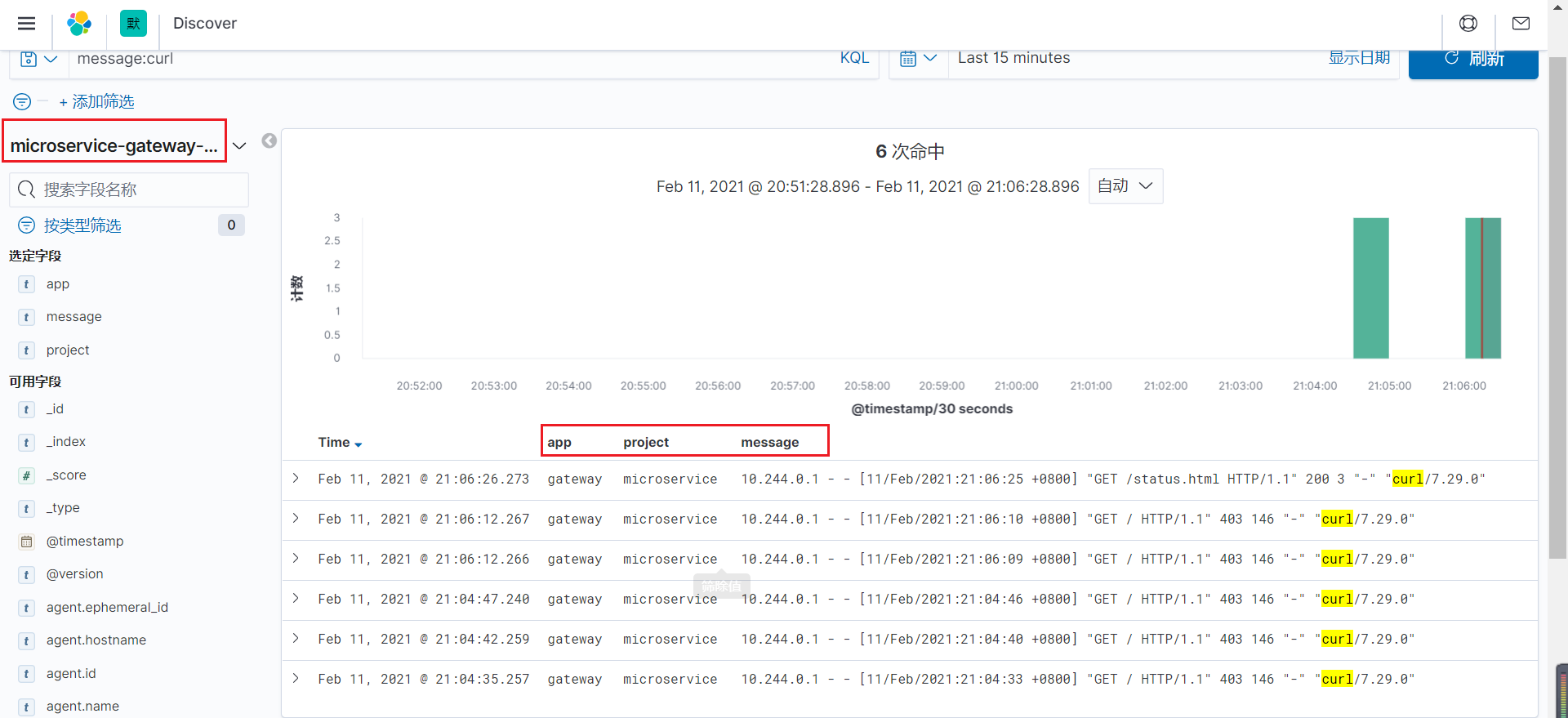

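View the logs in Kibana.

Open a shell in each container and list the log directory; both containers see the same files through the shared emptyDir volume:

```bash
# In the nginx container
kubectl exec -it nginx-log-demo-86b84c65b8-xwgsn -c nginx -- bash
ls /usr/local/nginx/logs/
# access.log  error.log

# In the filebeat container
kubectl exec -it nginx-log-demo-86b84c65b8-xwgsn -c filebeat -- bash
ls /usr/local/nginx/logs/
# access.log  error.log
```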

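Summary:

For standard output: deploy a log collector (filebeat) on every node. It collects from the host directory where Docker stores the containers' standard output, and uses filebeat's Docker and Kubernetes processing (such as the add_kubernetes_metadata processor) to tag each log with its source.

For log files inside containers: add a container running a log collector (filebeat) to the pod, and use an emptyDir volume to share the application container's log directory so that the filebeat container can read it.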
